How do media organisations responsibly report on AI-generated disinformation without inadvertently spreading the very content they seek to expose? My Churchill Fellowship research reveals this fundamental challenge facing journalism in the AI age, where traditional reporting practices may require significant adaptation.

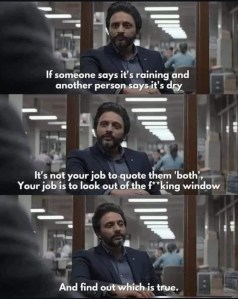

Current media coverage of AI-enabled disinformation often follows familiar patterns: linking to original sources, providing detailed descriptions of false content, or presenting threats in ways that may increase rather than decrease public vulnerability. Yet evidence suggests these approaches sometimes achieve the opposite of their intended effect, amplifying false narratives while attempting to debunk them.

My research explores how media organisations might develop responsible protocols for reporting on AI disinformation, protocols that inform the public about information threats without contributing to their spread. This requires understanding the difference between exposure and inoculation: helping audiences recognise manipulation techniques without providing blueprints for replication.

My research also examines how “traditional” journalists and digital content creators differ in the way they engage with electoral events, recognising that both have a part to play, particularly now that many younger people get much of their news and current affairs information from TikTok and Instagram Reels.

But the challenges facing media extend beyond disinformation coverage to fundamental questions about electoral reporting priorities. The Citizens’ Agenda model developed by Jay Rosen offers one alternative approach: engaging directly with communities to understand their information priorities and structuring coverage accordingly. Rather than focusing on horse-race dynamics, this model prioritises the substantive policy impacts that voters identify as most important.

My upcoming Churchill report examines whether such approaches might counter AI-driven tendencies toward sensationalised, algorithm-optimised content that often drowns out substantive democratic discourse. How might journalists – and responsible content creators – contribute to the election conversation while remaining economically viable in an attention-driven media landscape?

These questions become more pressing as AI capabilities advance and become more widely accessible. What responsibility do media organisations bear for the information environment they help create? How might journalists and content creators contribute to, rather than detract from, informed democratic participation in an age of artificial intelligence?

What role should media play in fostering democratic resilience rather than simply reporting on its erosion?